New upstream version 1.49.0

Andrej Shadura

2 years ago

| 373 | 373 | working-directory: complement/dockerfiles |

| 374 | 374 | |

| 375 | 375 | # Run Complement |

| 376 | - run: go test -v -tags synapse_blacklist,msc2403,msc2946,msc3083 ./tests/... | |

| 376 | - run: go test -v -tags synapse_blacklist,msc2403 ./tests/... | |

| 377 | 377 | env: |

| 378 | 378 | COMPLEMENT_BASE_IMAGE: complement-synapse:latest |

| 379 | 379 | working-directory: complement |

| 0 | Synapse 1.49.0 (2021-12-14) | |

| 1 | =========================== | |

| 2 | ||

| 3 | No significant changes since version 1.49.0rc1. | |

| 4 | ||

| 5 | ||

| 6 | Support for Ubuntu 21.04 ends next month on the 20th of January | |

| 7 | --------------------------------------------------------------- | |

| 8 | ||

| 9 | For users of Ubuntu 21.04 (Hirsute Hippo), please be aware that [upstream support for this version of Ubuntu will end next month][Ubuntu2104EOL]. | |

| 10 | We will stop producing packages for Ubuntu 21.04 after upstream support ends. | |

| 11 | ||

| 12 | [Ubuntu2104EOL]: https://lists.ubuntu.com/archives/ubuntu-announce/2021-December/000275.html | |

| 13 | ||

| 14 | ||

| 15 | The wiki has been migrated to the documentation website | |

| 16 | ------------------------------------------------------- | |

| 17 | ||

| 18 | We've decided to move the existing, somewhat stagnant pages from the GitHub wiki | |

| 19 | to the [documentation website](https://matrix-org.github.io/synapse/latest/). | |

| 20 | ||

| 21 | This was done for two reasons. The first was to ensure that changes are checked by | |

| 22 | multiple authors before being committed (everyone makes mistakes!) and the second | |

| 23 | was visibility of the documentation. Not everyone knows that Synapse has some very | |

| 24 | useful information hidden away in its GitHub wiki pages. Bringing them to the | |

| 25 | documentation website should help with visibility, as well as keep all Synapse documentation | |

| 26 | in one, easily-searchable location. | |

| 27 | ||

| 28 | Note that contributions to the documentation website happen through [GitHub pull | |

| 29 | requests](https://github.com/matrix-org/synapse/pulls). Please visit [#synapse-dev:matrix.org](https://matrix.to/#/#synapse-dev:matrix.org) | |

| 30 | if you need help with the process! | |

| 31 | ||

| 32 | ||

| 33 | Synapse 1.49.0rc1 (2021-12-07) | |

| 34 | ============================== | |

| 35 | ||

| 36 | Features | |

| 37 | -------- | |

| 38 | ||

| 39 | - Add [MSC3030](https://github.com/matrix-org/matrix-doc/pull/3030) experimental client and federation API endpoints to get the closest event to a given timestamp. ([\#9445](https://github.com/matrix-org/synapse/issues/9445)) | |

| 40 | - Include bundled relation aggregations during a limited `/sync` request and `/relations` request, per [MSC2675](https://github.com/matrix-org/matrix-doc/pull/2675). ([\#11284](https://github.com/matrix-org/synapse/issues/11284), [\#11478](https://github.com/matrix-org/synapse/issues/11478)) | |

| 41 | - Add plugin support for controlling database background updates. ([\#11306](https://github.com/matrix-org/synapse/issues/11306), [\#11475](https://github.com/matrix-org/synapse/issues/11475), [\#11479](https://github.com/matrix-org/synapse/issues/11479)) | |

| 42 | - Support the stable API endpoints for [MSC2946](https://github.com/matrix-org/matrix-doc/pull/2946): the room `/hierarchy` endpoint. ([\#11329](https://github.com/matrix-org/synapse/issues/11329)) | |

| 43 | - Add admin API to get some information about federation status with remote servers. ([\#11407](https://github.com/matrix-org/synapse/issues/11407)) | |

| 44 | - Support expiry of refresh tokens and expiry of the overall session when refresh tokens are in use. ([\#11425](https://github.com/matrix-org/synapse/issues/11425)) | |

| 45 | - Stabilise support for [MSC2918](https://github.com/matrix-org/matrix-doc/blob/main/proposals/2918-refreshtokens.md#msc2918-refresh-tokens) refresh tokens as they have now been merged into the Matrix specification. ([\#11435](https://github.com/matrix-org/synapse/issues/11435), [\#11522](https://github.com/matrix-org/synapse/issues/11522)) | |

| 46 | - Update [MSC2918 refresh token](https://github.com/matrix-org/matrix-doc/blob/main/proposals/2918-refreshtokens.md#msc2918-refresh-tokens) support to conform with the latest revision: accept the `refresh_tokens` parameter in the request body rather than in the URL parameters. ([\#11430](https://github.com/matrix-org/synapse/issues/11430)) | |

| 47 | - Support configuring the lifetime of non-refreshable access tokens separately to refreshable access tokens. ([\#11445](https://github.com/matrix-org/synapse/issues/11445)) | |

| 48 | - Expose `synapse_homeserver` and `synapse_worker` commands as entry points to run Synapse's main process and worker processes, respectively. Contributed by @Ma27. ([\#11449](https://github.com/matrix-org/synapse/issues/11449)) | |

| 49 | - `synctl stop` will now wait for Synapse to exit before returning. ([\#11459](https://github.com/matrix-org/synapse/issues/11459), [\#11490](https://github.com/matrix-org/synapse/issues/11490)) | |

| 50 | - Extend the "delete room" admin api to work correctly on rooms which have previously been partially deleted. ([\#11523](https://github.com/matrix-org/synapse/issues/11523)) | |

| 51 | - Add support for the `/_matrix/client/v3/login/sso/redirect/{idpId}` API from Matrix v1.1. This endpoint was overlooked when support for v3 endpoints was added in Synapse 1.48.0rc1. ([\#11451](https://github.com/matrix-org/synapse/issues/11451)) | |

| 52 | ||

| 53 | ||

| 54 | Bugfixes | |

| 55 | -------- | |

| 56 | ||

| 57 | - Fix using [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) batch sending in combination with event persistence workers. Contributed by @tulir at Beeper. ([\#11220](https://github.com/matrix-org/synapse/issues/11220)) | |

| 58 | - Fix a long-standing bug where all requests that read events from the database could get stuck as a result of losing the database connection, properly this time. Also fix a race condition introduced in the previous insufficient fix in Synapse 1.47.0. ([\#11376](https://github.com/matrix-org/synapse/issues/11376)) | |

| 59 | - The `/send_join` response now includes the stable `event` field instead of the unstable field from [MSC3083](https://github.com/matrix-org/matrix-doc/pull/3083). ([\#11413](https://github.com/matrix-org/synapse/issues/11413)) | |

| 60 | - Fix a bug introduced in Synapse 1.47.0 where `send_join` could fail due to an outdated `ijson` version. ([\#11439](https://github.com/matrix-org/synapse/issues/11439), [\#11441](https://github.com/matrix-org/synapse/issues/11441), [\#11460](https://github.com/matrix-org/synapse/issues/11460)) | |

| 61 | - Fix a bug introduced in Synapse 1.36.0 which could cause problems fetching event-signing keys from trusted key servers. ([\#11440](https://github.com/matrix-org/synapse/issues/11440)) | |

| 62 | - Fix a bug introduced in Synapse 1.47.1 where the media repository would fail to work if the media store path contained any symbolic links. ([\#11446](https://github.com/matrix-org/synapse/issues/11446)) | |

| 63 | - Fix an `LruCache` corruption bug, introduced in Synapse 1.38.0, that would cause certain requests to fail until the next Synapse restart. ([\#11454](https://github.com/matrix-org/synapse/issues/11454)) | |

| 64 | - Fix a long-standing bug where invites from ignored users were included in incremental syncs. ([\#11511](https://github.com/matrix-org/synapse/issues/11511)) | |

| 65 | - Fix a regression in Synapse 1.48.0 where presence workers would not clear their presence updates over replication on shutdown. ([\#11518](https://github.com/matrix-org/synapse/issues/11518)) | |

| 66 | - Fix a regression in Synapse 1.48.0 where the module API's `looping_background_call` method would spam errors to the logs when given a non-async function. ([\#11524](https://github.com/matrix-org/synapse/issues/11524)) | |

| 67 | ||

| 68 | ||

| 69 | Updates to the Docker image | |

| 70 | --------------------------- | |

| 71 | ||

| 72 | - Update `Dockerfile-workers` to healthcheck all workers in the container. ([\#11429](https://github.com/matrix-org/synapse/issues/11429)) | |

| 73 | ||

| 74 | ||

| 75 | Improved Documentation | |

| 76 | ---------------------- | |

| 77 | ||

| 78 | - Update the media repository documentation. ([\#11415](https://github.com/matrix-org/synapse/issues/11415)) | |

| 79 | - Update the section about backward extremities in the room DAG concepts doc to correct the misconception about backward extremities indicating whether we have fetched an event's `prev_events`. ([\#11469](https://github.com/matrix-org/synapse/issues/11469)) | |

| 80 | ||

| 81 | ||

| 82 | Internal Changes | |

| 83 | ---------------- | |

| 84 | ||

| 85 | - Add `Final` annotation to string constants in `synapse.api.constants` so that they get typed as `Literal`s. ([\#11356](https://github.com/matrix-org/synapse/issues/11356)) | |

| 86 | - Add a check to ensure that users cannot start the Synapse master process when `worker_app` is set. ([\#11416](https://github.com/matrix-org/synapse/issues/11416)) | |

| 87 | - Add a note about postgres memory management and hugepages to postgres doc. ([\#11467](https://github.com/matrix-org/synapse/issues/11467)) | |

| 88 | - Add missing type hints to `synapse.config` module. ([\#11465](https://github.com/matrix-org/synapse/issues/11465)) | |

| 89 | - Add missing type hints to `synapse.federation`. ([\#11483](https://github.com/matrix-org/synapse/issues/11483)) | |

| 90 | - Add type annotations to `tests.storage.test_appservice`. ([\#11488](https://github.com/matrix-org/synapse/issues/11488), [\#11492](https://github.com/matrix-org/synapse/issues/11492)) | |

| 91 | - Add type annotations to some of the configuration surrounding refresh tokens. ([\#11428](https://github.com/matrix-org/synapse/issues/11428)) | |

| 92 | - Add type hints to `synapse/tests/rest/admin`. ([\#11501](https://github.com/matrix-org/synapse/issues/11501)) | |

| 93 | - Add type hints to storage classes. ([\#11411](https://github.com/matrix-org/synapse/issues/11411)) | |

| 94 | - Add wiki pages to documentation website. ([\#11402](https://github.com/matrix-org/synapse/issues/11402)) | |

| 95 | - Clean up `tests.storage.test_main` to remove use of legacy code. ([\#11493](https://github.com/matrix-org/synapse/issues/11493)) | |

| 96 | - Clean up `tests.test_visibility` to remove legacy code. ([\#11495](https://github.com/matrix-org/synapse/issues/11495)) | |

| 97 | - Convert status codes to `HTTPStatus` in `synapse.rest.admin`. ([\#11452](https://github.com/matrix-org/synapse/issues/11452), [\#11455](https://github.com/matrix-org/synapse/issues/11455)) | |

| 98 | - Extend the `scripts-dev/sign_json` script to support signing events. ([\#11486](https://github.com/matrix-org/synapse/issues/11486)) | |

| 99 | - Improve internal types in push code. ([\#11409](https://github.com/matrix-org/synapse/issues/11409)) | |

| 100 | - Improve type annotations in `synapse.module_api`. ([\#11029](https://github.com/matrix-org/synapse/issues/11029)) | |

| 101 | - Improve type hints for `LruCache`. ([\#11453](https://github.com/matrix-org/synapse/issues/11453)) | |

| 102 | - Preparation for database schema simplifications: disambiguate queries on `state_key`. ([\#11497](https://github.com/matrix-org/synapse/issues/11497)) | |

| 103 | - Refactor `backfilled` into specific behavior function arguments (`_persist_events_and_state_updates` and downstream calls). ([\#11417](https://github.com/matrix-org/synapse/issues/11417)) | |

| 104 | - Refactor `get_version_string` to fix-up types and duplicated code. ([\#11468](https://github.com/matrix-org/synapse/issues/11468)) | |

| 105 | - Refactor various parts of the `/sync` handler. ([\#11494](https://github.com/matrix-org/synapse/issues/11494), [\#11515](https://github.com/matrix-org/synapse/issues/11515)) | |

| 106 | - Remove unnecessary `json.dumps` from `tests.rest.admin`. ([\#11461](https://github.com/matrix-org/synapse/issues/11461)) | |

| 107 | - Save the OpenID Connect session ID on login. ([\#11482](https://github.com/matrix-org/synapse/issues/11482)) | |

| 108 | - Update and clean up recently ported documentation pages. ([\#11466](https://github.com/matrix-org/synapse/issues/11466)) | |

| 109 | ||

| 110 | ||

| 0 | 111 | Synapse 1.48.0 (2021-11-30) |

| 1 | 112 | =========================== |

| 2 | 113 |

| 0 | matrix-synapse-py3 (1.49.0) stable; urgency=medium | |

| 1 | ||

| 2 | * New synapse release 1.49.0. | |

| 3 | ||

| 4 | -- Synapse Packaging team <packages@matrix.org> Tue, 14 Dec 2021 12:39:46 +0000 | |

| 5 | ||

| 6 | matrix-synapse-py3 (1.49.0~rc1) stable; urgency=medium | |

| 7 | ||

| 8 | * New synapse release 1.49.0~rc1. | |

| 9 | ||

| 10 | -- Synapse Packaging team <packages@matrix.org> Tue, 07 Dec 2021 13:52:21 +0000 | |

| 11 | ||

| 0 | 12 | matrix-synapse-py3 (1.48.0) stable; urgency=medium |

| 1 | 13 | |

| 2 | 14 | * New synapse release 1.48.0. |

| 20 | 20 | # files to run the desired worker configuration. Will start supervisord. |

| 21 | 21 | COPY ./docker/configure_workers_and_start.py /configure_workers_and_start.py |

| 22 | 22 | ENTRYPOINT ["/configure_workers_and_start.py"] |

| 23 | ||

| 24 | HEALTHCHECK --start-period=5s --interval=15s --timeout=5s \ | |

| 25 | CMD /bin/sh /healthcheck.sh |

| 0 | #!/bin/sh | |

| 1 | # This healthcheck script is designed to return OK when every | |

| 2 | # host involved returns OK | |

| 3 | {%- for healthcheck_url in healthcheck_urls %} | |

| 4 | curl -fSs {{ healthcheck_url }} || exit 1 | |

| 5 | {%- endfor %} |
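
The healthcheck wiring in this commit (a URL list built in `configure_workers_and_start.py`, then rendered through `healthcheck.sh.j2`) can be approximated in plain Python. This is a minimal sketch, not the code from the commit: the function names `build_healthcheck_urls` and `render_healthcheck_script` are hypothetical, and string formatting stands in for the Jinja2 rendering done by `convert()`.

```python
from typing import Dict, List


def build_healthcheck_urls(nginx_upstreams: Dict[str, List[int]]) -> List[str]:
    """Collect one /health URL per process, main process first."""
    # The main process is checked on port 8080 and exists even when no
    # workers are configured.
    urls = ["http://localhost:8080/health"]
    for ports in nginx_upstreams.values():
        for port in ports:
            urls.append("http://localhost:%d/health" % (port,))
    return urls


def render_healthcheck_script(urls: List[str]) -> str:
    """Approximate the rendered healthcheck script (assumption: no Jinja2)."""
    lines = [
        "#!/bin/sh",
        "# This healthcheck script is designed to return OK when every",
        "# host involved returns OK",
    ]
    # One curl per URL; the first failure makes the whole check fail.
    lines.extend("curl -fSs %s || exit 1" % (url,) for url in urls)
    return "\n".join(lines) + "\n"
```

Because each `curl` is followed by `|| exit 1`, the script exits non-zero as soon as any process fails its check, which is what lets the Docker `HEALTHCHECK` cover every worker in the container.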

| 473 | 473 | |

| 474 | 474 | # Determine the load-balancing upstreams to configure |

| 475 | 475 | nginx_upstream_config = "" |

| 476 | ||

| 477 | # At the same time, prepare a list of internal endpoints to healthcheck | |

| 478 | # starting with the main process which exists even if no workers do. | |

| 479 | healthcheck_urls = ["http://localhost:8080/health"] | |

| 480 | ||

| 476 | 481 | for upstream_worker_type, upstream_worker_ports in nginx_upstreams.items(): |

| 477 | 482 | body = "" |

| 478 | 483 | for port in upstream_worker_ports: |

| 479 | 484 | body += " server localhost:%d;\n" % (port,) |

| 485 | healthcheck_urls.append("http://localhost:%d/health" % (port,)) | |

| 480 | 486 | |

| 481 | 487 | # Add to the list of configured upstreams |

| 482 | 488 | nginx_upstream_config += NGINX_UPSTREAM_CONFIG_BLOCK.format( |

| 509 | 515 | worker_config=supervisord_config, |

| 510 | 516 | ) |

| 511 | 517 | |

| 518 | # healthcheck config | |

| 519 | convert( | |

| 520 | "/conf/healthcheck.sh.j2", | |

| 521 | "/healthcheck.sh", | |

| 522 | healthcheck_urls=healthcheck_urls, | |

| 523 | ) | |

| 524 | ||

| 512 | 525 | # Ensure the logging directory exists |

| 513 | 526 | log_dir = data_dir + "/logs" |

| 514 | 527 | if not os.path.exists(log_dir): |

| 43 | 43 | - [Presence router callbacks](modules/presence_router_callbacks.md) |

| 44 | 44 | - [Account validity callbacks](modules/account_validity_callbacks.md) |

| 45 | 45 | - [Password auth provider callbacks](modules/password_auth_provider_callbacks.md) |

| 46 | - [Background update controller callbacks](modules/background_update_controller_callbacks.md) | |

| 46 | 47 | - [Porting a legacy module to the new interface](modules/porting_legacy_module.md) |

| 47 | 48 | - [Workers](workers.md) |

| 48 | 49 | - [Using `synctl` with Workers](synctl_workers.md) |

| 63 | 64 | - [Statistics](admin_api/statistics.md) |

| 64 | 65 | - [Users](admin_api/user_admin_api.md) |

| 65 | 66 | - [Server Version](admin_api/version_api.md) |

| 67 | - [Federation](usage/administration/admin_api/federation.md) | |

| 66 | 68 | - [Manhole](manhole.md) |

| 67 | 69 | - [Monitoring](metrics-howto.md) |

| 70 | - [Understanding Synapse Through Grafana Graphs](usage/administration/understanding_synapse_through_grafana_graphs.md) | |

| 71 | - [Useful SQL for Admins](usage/administration/useful_sql_for_admins.md) | |

| 72 | - [Database Maintenance Tools](usage/administration/database_maintenance_tools.md) | |

| 73 | - [State Groups](usage/administration/state_groups.md) | |

| 68 | 74 | - [Request log format](usage/administration/request_log.md) |

| 75 | - [Admin FAQ](usage/administration/admin_faq.md) | |

| 69 | 76 | - [Scripts]() |

| 70 | 77 | |

| 71 | 78 | # Development |

| 93 | 100 | |

| 94 | 101 | # Other |

| 95 | 102 | - [Dependency Deprecation Policy](deprecation_policy.md) |

| 103 | - [Running Synapse on a Single-Board Computer](other/running_synapse_on_single_board_computers.md) | |

| 37 | 37 | The forward extremities of a room are used as the `prev_events` when the next event is sent. |

| 38 | 38 | |

| 39 | 39 | |

| 40 | ## Backwards extremity | |

| 40 | ## Backward extremity | |

| 41 | 41 | |

| 42 | 42 | The current marker of where we have backfilled up to and will generally be the |

| 43 | oldest-in-time events we know of in the DAG. | |

| 43 | `prev_events` of the oldest-in-time events we have in the DAG. This gives a starting point when | |

| 44 | backfilling history. | |

| 44 | 45 | |

| 45 | This is an event where we haven't fetched all of the `prev_events` for. | |

| 46 | ||

| 47 | Once we have fetched all of its `prev_events`, it's unmarked as a backwards | |

| 48 | extremity (although we may have formed new backwards extremities from the prev | |

| 49 | events during the backfilling process). | |

| 46 | When we persist a non-outlier event, we clear it as a backward extremity and set | |

| 47 | all of its `prev_events` as the new backward extremities if they aren't already | |

| 48 | persisted in the `events` table. | |

| 50 | 49 | |

| 51 | 50 | |

| 52 | 51 | ## Outliers |

| 55 | 54 | room at that point in the DAG yet. |

| 56 | 55 | |

| 57 | 56 | We won't *necessarily* have the `prev_events` of an `outlier` in the database, |

| 58 | but it's entirely possible that we *might*. The status of whether we have all of | |

| 59 | the `prev_events` is marked as a [backwards extremity](#backwards-extremity). | |

| 57 | but it's entirely possible that we *might*. | |

| 60 | 58 | |

| 61 | 59 | For example, when we fetch the event auth chain or state for a given event, we |

| 62 | 60 | mark all of those claimed auth events as outliers because we haven't done the |

| 1 | 1 | |

| 2 | 2 | *Synapse implementation-specific details for the media repository* |

| 3 | 3 | |

| 4 | The media repository is where attachments and avatar photos are stored. | |

| 5 | It stores attachment content and thumbnails for media uploaded by local users. | |

| 6 | It caches attachment content and thumbnails for media uploaded by remote users. | |

| 4 | The media repository | |

| 5 | * stores avatars, attachments and their thumbnails for media uploaded by local | |

| 6 | users. | |

| 7 | * caches avatars, attachments and their thumbnails for media uploaded by remote | |

| 8 | users. | |

| 9 | * caches resources and thumbnails used for | |

| 10 | [URL previews](development/url_previews.md). | |

| 7 | 11 | |

| 8 | ## Storage | |

| 12 | All media in Matrix can be identified by a unique | |

| 13 | [MXC URI](https://spec.matrix.org/latest/client-server-api/#matrix-content-mxc-uris), | |

| 14 | consisting of a server name and media ID: | |

| 15 | ``` | |

| 16 | mxc://<server-name>/<media-id> | |

| 17 | ``` | |

| 9 | 18 | |

| 10 | Each item of media is assigned a `media_id` when it is uploaded. | |

| 11 | The `media_id` is a randomly chosen, URL safe 24 character string. | |

| 19 | ## Local Media | |

| 20 | Synapse generates 24 character media IDs for content uploaded by local users. | |

| 21 | These media IDs consist of upper and lowercase letters and are case-sensitive. | |

| 22 | Other homeserver implementations may generate media IDs differently. | |

| 12 | 23 | |

| 13 | Metadata such as the MIME type, upload time and length are stored in the | |

| 14 | sqlite3 database indexed by `media_id`. | |

| 24 | Local media is recorded in the `local_media_repository` table, which includes | |

| 25 | metadata such as MIME types, upload times and file sizes. | |

| 26 | Note that this table is shared by the URL cache, which has a different media ID | |

| 27 | scheme. | |

| 15 | 28 | |

| 16 | Content is stored on the filesystem under a `"local_content"` directory. | |

| 29 | ### Paths | |

| 30 | A file with media ID `aabbcccccccccccccccccccc` and its `128x96` `image/jpeg` | |

| 31 | thumbnail, created by scaling, would be stored at: | |

| 32 | ``` | |

| 33 | local_content/aa/bb/cccccccccccccccccccc | |

| 34 | local_thumbnails/aa/bb/cccccccccccccccccccc/128-96-image-jpeg-scale | |

| 35 | ``` | |

| 17 | 36 | |

| 18 | Thumbnails are stored under a `"local_thumbnails"` directory. | |

| 37 | ## Remote Media | |

| 38 | When media from a remote homeserver is requested from Synapse, it is assigned | |

| 39 | a local `filesystem_id`, with the same format as locally-generated media IDs, | |

| 40 | as described above. | |

| 19 | 41 | |

| 20 | The item with `media_id` `"aabbccccccccdddddddddddd"` is stored under | |

| 21 | `"local_content/aa/bb/ccccccccdddddddddddd"`. Its thumbnail with width | |

| 22 | `128` and height `96` and type `"image/jpeg"` is stored under | |

| 23 | `"local_thumbnails/aa/bb/ccccccccdddddddddddd/128-96-image-jpeg"` | |

| 42 | A record of remote media is stored in the `remote_media_cache` table, which | |

| 43 | can be used to map remote MXC URIs (server names and media IDs) to local | |

| 44 | `filesystem_id`s. | |

| 24 | 45 | |

| 25 | Remote content is cached under `"remote_content"` directory. Each item of | |

| 26 | remote content is assigned a local `"filesystem_id"` to ensure that the | |

| 27 | directory structure `"remote_content/server_name/aa/bb/ccccccccdddddddddddd"` | |

| 28 | is appropriate. Thumbnails for remote content are stored under | |

| 29 | `"remote_thumbnail/server_name/..."` | |

| 46 | ### Paths | |

| 47 | A file from `matrix.org` with `filesystem_id` `aabbcccccccccccccccccccc` and its | |

| 48 | `128x96` `image/jpeg` thumbnail, created by scaling, would be stored at: | |

| 49 | ``` | |

| 50 | remote_content/matrix.org/aa/bb/cccccccccccccccccccc | |

| 51 | remote_thumbnail/matrix.org/aa/bb/cccccccccccccccccccc/128-96-image-jpeg-scale | |

| 52 | ``` | |

| 53 | Older thumbnails may omit the thumbnailing method: | |

| 54 | ``` | |

| 55 | remote_thumbnail/matrix.org/aa/bb/cccccccccccccccccccc/128-96-image-jpeg | |

| 56 | ``` | |

| 57 | ||

| 58 | Note that `remote_thumbnail/` does not have an `s`. | |

| 59 | ||

| 60 | ## URL Previews | |

| 61 | See [URL Previews](development/url_previews.md) for documentation on the URL preview | |

| 62 | process. | |

| 63 | ||

| 64 | When generating previews for URLs, Synapse may download and cache various | |

| 65 | resources, including images. These resources are assigned temporary media IDs | |

| 66 | of the form `yyyy-mm-dd_aaaaaaaaaaaaaaaa`, where `yyyy-mm-dd` is the current | |

| 67 | date and `aaaaaaaaaaaaaaaa` is a random sequence of 16 case-sensitive letters. | |

| 68 | ||

| 69 | The metadata for these cached resources is stored in the | |

| 70 | `local_media_repository` and `local_media_repository_url_cache` tables. | |

| 71 | ||

| 72 | Resources for URL previews are deleted after a few days. | |

| 73 | ||

| 74 | ### Paths | |

| 75 | The file with media ID `yyyy-mm-dd_aaaaaaaaaaaaaaaa` and its `128x96` | |

| 76 | `image/jpeg` thumbnail, created by scaling, would be stored at: | |

| 77 | ``` | |

| 78 | url_cache/yyyy-mm-dd/aaaaaaaaaaaaaaaa | |

| 79 | url_cache_thumbnails/yyyy-mm-dd/aaaaaaaaaaaaaaaa/128-96-image-jpeg-scale | |

| 80 | ``` |
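
The path layouts described in the new media repository documentation can be summarised as a small Python sketch. The helper names below are hypothetical and are not taken from Synapse's code; the paths are relative to the media store and only mirror the examples given in the document.

```python
from typing import Optional


def _thumbnail_name(
    width: int, height: int, content_type: str, method: Optional[str]
) -> str:
    """Build e.g. '128-96-image-jpeg-scale' from the thumbnail parameters."""
    top_level, sub_type = content_type.split("/")
    name = "%d-%d-%s-%s" % (width, height, top_level, sub_type)
    # Older thumbnails may omit the thumbnailing method.
    return name if method is None else "%s-%s" % (name, method)


def _split_id(media_id: str) -> str:
    """Turn 'aabbcccc...' into 'aa/bb/cccc...'."""
    return "/".join([media_id[0:2], media_id[2:4], media_id[4:]])


def local_media_path(media_id: str) -> str:
    return "local_content/" + _split_id(media_id)


def local_thumbnail_path(
    media_id: str, width: int, height: int, content_type: str,
    method: Optional[str] = "scale",
) -> str:
    name = _thumbnail_name(width, height, content_type, method)
    return "local_thumbnails/%s/%s" % (_split_id(media_id), name)


def remote_media_path(server_name: str, filesystem_id: str) -> str:
    return "remote_content/%s/%s" % (server_name, _split_id(filesystem_id))


def remote_thumbnail_path(
    server_name: str, filesystem_id: str, width: int, height: int,
    content_type: str, method: Optional[str] = "scale",
) -> str:
    name = _thumbnail_name(width, height, content_type, method)
    # Note: "remote_thumbnail" has no trailing "s".
    return "remote_thumbnail/%s/%s/%s" % (
        server_name, _split_id(filesystem_id), name
    )


def url_cache_path(media_id: str) -> str:
    """Map 'yyyy-mm-dd_aaaaaaaaaaaaaaaa' to 'url_cache/yyyy-mm-dd/aaaa...'."""
    date, _, random_part = media_id.partition("_")
    return "url_cache/%s/%s" % (date, random_part)
```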

| 0 | # Background update controller callbacks | |

| 1 | ||

| 2 | Background update controller callbacks allow module developers to control (e.g. rate-limit) | |

| 3 | how database background updates are run. A database background update is an operation | |

| 4 | Synapse runs on its database in the background after it starts. It's usually used to run | |

| 5 | database operations that would take too long if they were run at the same time as schema | |

| 6 | updates (which are run on startup) and delay Synapse's startup too much: populating a | |

| 7 | table with a large amount of data, adding an index on a large table, deleting superfluous data, | |

| 8 | etc. | |

| 9 | ||

| 10 | Background update controller callbacks can be registered using the module API's | |

| 11 | `register_background_update_controller_callbacks` method. Only the first module (in order | |

| 12 | of appearance in Synapse's configuration file) calling this method can register background | |

| 13 | update controller callbacks; subsequent calls are ignored. | |

| 14 | ||

| 15 | The available background update controller callbacks are: | |

| 16 | ||

| 17 | ### `on_update` | |

| 18 | ||

| 19 | _First introduced in Synapse v1.49.0_ | |

| 20 | ||

| 21 | ```python | |

| 22 | def on_update(update_name: str, database_name: str, one_shot: bool) -> AsyncContextManager[int] | |

| 23 | ``` | |

| 24 | ||

| 25 | Called when about to do an iteration of a background update. The module is given the name | |

| 26 | of the update, the name of the database, and a flag to indicate whether the background | |

| 27 | update will happen in one go and may take a long time (e.g. creating indices). If this last | |

| 28 | argument is set to `False`, the update will be run in batches. | |

| 29 | ||

| 30 | The module must return an async context manager. It will be entered before Synapse runs a | |

| 31 | background update; this should return the desired duration of the iteration, in | |

| 32 | milliseconds. | |

| 33 | ||

| 34 | The context manager will be exited when the iteration completes. Note that the duration | |

| 35 | returned by the context manager is a target, and an iteration may take substantially longer | |

| 36 | or shorter. If the `one_shot` flag is set to `True`, the duration returned is ignored. | |

| 37 | ||

| 38 | __Note__: Unlike most module callbacks in Synapse, this one is _synchronous_. This is | |

| 39 | because asynchronous operations are expected to be run by the async context manager. | |

| 40 | ||

| 41 | This callback is required when registering any other background update controller callback. | |

| 42 | ||

| 43 | ### `default_batch_size` | |

| 44 | ||

| 45 | _First introduced in Synapse v1.49.0_ | |

| 46 | ||

| 47 | ```python | |

| 48 | async def default_batch_size(update_name: str, database_name: str) -> int | |

| 49 | ``` | |

| 50 | ||

| 51 | Called before the first iteration of a background update, with the name of the update and | |

| 52 | of the database. The module must return the number of elements to process in this first | |

| 53 | iteration. | |

| 54 | ||

| 55 | If this callback is not defined, Synapse will use a default value of 100. | |

| 56 | ||

| 57 | ### `min_batch_size` | |

| 58 | ||

| 59 | _First introduced in Synapse v1.49.0_ | |

| 60 | ||

| 61 | ```python | |

| 62 | async def min_batch_size(update_name: str, database_name: str) -> int | |

| 63 | ``` | |

| 64 | ||

| 65 | Called before running a new batch for a background update, with the name of the update and | |

| 66 | of the database. The module must return an integer representing the minimum number of | |

| 67 | elements to process in this iteration. This number must be at least 1, and is used to | |

| 68 | ensure that progress is always made. | |

| 69 | ||

| 70 | If this callback is not defined, Synapse will use a default value of 100. |
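
As an illustration of the three callbacks documented above, here is a minimal sketch of a module that throttles background updates. The class name `ThrottledBackgroundUpdates`, its configuration keys and the returned values are all hypothetical; only the callback names, their signatures and `register_background_update_controller_callbacks` come from the documentation in this diff.

```python
import asyncio
from contextlib import asynccontextmanager
from typing import AsyncIterator


class ThrottledBackgroundUpdates:
    """Hypothetical module that slows down database background updates."""

    def __init__(self, config: dict, api) -> None:
        # Both config keys are assumptions made for this example.
        self._sleep_s = config.get("sleep_between_iterations_s", 1.0)
        self._duration_ms = config.get("target_duration_ms", 100)
        api.register_background_update_controller_callbacks(
            on_update=self.on_update,
            default_batch_size=self.default_batch_size,
            min_batch_size=self.min_batch_size,
        )

    def on_update(self, update_name: str, database_name: str, one_shot: bool):
        # Synchronous callback: it only returns the async context manager.
        return self._iteration()

    @asynccontextmanager
    async def _iteration(self) -> AsyncIterator[int]:
        # Entered before an iteration: pause, then hand back the target
        # duration of the iteration in milliseconds.
        await asyncio.sleep(self._sleep_s)
        yield self._duration_ms
        # Code placed here would run once the iteration has completed.

    async def default_batch_size(self, update_name: str, database_name: str) -> int:
        # Number of elements to process in the first iteration.
        return 50

    async def min_batch_size(self, update_name: str, database_name: str) -> int:
        # Must be at least 1 so that progress is always made.
        return 1
```

Keeping the sleep inside the context manager rather than in `on_update` itself matches the note above that asynchronous work is expected to be done by the async context manager.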

| 70 | 70 | ## Registering a callback |

| 71 | 71 | |

| 72 | 72 | Modules can use Synapse's module API to register callbacks. Callbacks are functions that |

| 73 | Synapse will call when performing specific actions. Callbacks must be asynchronous, and | |

| 74 | are split in categories. A single module may implement callbacks from multiple categories, | |

| 75 | and is under no obligation to implement all callbacks from the categories it registers | |

| 76 | callbacks for. | |

| 73 | Synapse will call when performing specific actions. Callbacks must be asynchronous (unless | |

| 74 | specified otherwise), and are split in categories. A single module may implement callbacks | |

| 75 | from multiple categories, and is under no obligation to implement all callbacks from the | |

| 76 | categories it registers callbacks for. | |

| 77 | 77 | |

| 78 | 78 | Modules can register callbacks using one of the module API's `register_[...]_callbacks` |

| 79 | 79 | methods. The callback functions are passed to these methods as keyword arguments, with |

| 80 | the callback name as the argument name and the function as its value. This is demonstrated | |

| 81 | in the example below. A `register_[...]_callbacks` method exists for each category. | |

| 80 | the callback name as the argument name and the function as its value. A | |

| 81 | `register_[...]_callbacks` method exists for each category. | |

| 82 | 82 | |

| 83 | 83 | Callbacks for each category can be found on their respective page of the |

| 84 | 84 | [Synapse documentation website](https://matrix-org.github.io/synapse).⏎ |

| 82 | 82 | |

| 83 | 83 | ### Dex |

| 84 | 84 | |

| 85 | [Dex][dex-idp] is a simple, open-source, certified OpenID Connect Provider. | |

| 85 | [Dex][dex-idp] is a simple, open-source OpenID Connect Provider. | |

| 86 | 86 | Although it is designed to help building a full-blown provider with an |

| 87 | 87 | external database, it can be configured with static passwords in a config file. |

| 88 | 88 | |

| 522 | 522 | email_template: "{{ user.email }}" |

| 523 | 523 | ``` |

| 524 | 524 | |

| 525 | ## Django OAuth Toolkit | |

| 525 | ### Django OAuth Toolkit | |

| 526 | 526 | |

| 527 | 527 | [django-oauth-toolkit](https://github.com/jazzband/django-oauth-toolkit) is a |

| 528 | 528 | Django application providing out of the box all the endpoints, data and logic |

| 0 | ## Summary of performance impact of running on resource constrained devices such as SBCs | |

| 1 | ||

| 2 | I've been running my homeserver on a cubietruck at home now for some time and am often replying to statements like "you need loads of ram to join large rooms" with "it works fine for me". I thought it might be useful to curate a summary of the issues you're likely to run into to help as a scaling-down guide, maybe highlight these for development work or end up as documentation. It seems that once you get up to about 4x1.5GHz arm64 4GiB these issues are no longer a problem. | |

| 3 | ||

| 4 | - **Platform**: 2x1GHz armhf 2GiB ram [Single-board computers](https://wiki.debian.org/CheapServerBoxHardware), SSD, postgres. | |

| 5 | ||

| 6 | ### Presence | |

| 7 | ||

| 8 | This is the main reason people have a poor matrix experience on resource constrained homeservers. Element web will frequently be saying the server is offline while the python process will be pegged at 100% cpu. This feature is used to tell when other users are active (have a client app in the foreground) and therefore more likely to respond, but requires a lot of network activity to maintain even when nobody is talking in a room. | |

| 9 | ||

| 10 |  | |

| 11 | ||

| 12 | While synapse does have some performance issues with presence [#3971](https://github.com/matrix-org/synapse/issues/3971), the fundamental problem is that this is an easy feature to implement for a centralised service at nearly no overhead, but federation makes it combinatorial [#8055](https://github.com/matrix-org/synapse/issues/8055). There is also a client-side config option which disables the UI and idle tracking [enable_presence_by_hs_url] to blacklist the largest instances but I didn't notice much difference, so I recommend disabling the feature entirely at the server level as well. | |

| 13 | ||

| 14 | [enable_presence_by_hs_url]: https://github.com/vector-im/element-web/blob/v1.7.8/config.sample.json#L45 | |

| 15 | ||

| 16 | ### Joining | |

| 17 | ||

| 18 | Joining a "large", federated room will initially fail with the below message in Element web, but waiting a while (10-60mins) and trying again will succeed without any issue. What counts as "large" is not message history, user count, connections to homeservers or even a simple count of the state events; it is instead how long the state resolution algorithm takes. However, each of those numbers is a reasonable proxy, so we can use them as estimates since user count is one of the few things you see before joining. | |

| 19 | ||

| 20 |  | |

| 21 | ||

| 22 | This is [#1211](https://github.com/matrix-org/synapse/issues/1211) and will also hopefully be mitigated by peeking [matrix-org/matrix-doc#2753](https://github.com/matrix-org/matrix-doc/pull/2753) so at least you don't need to wait for a join to complete before finding out if it's the kind of room you want. Note that you should first disable presence, otherwise it'll just make the situation worse [#3120](https://github.com/matrix-org/synapse/issues/3120). There is a lot of database interaction too, so make sure you've [migrated your data](../postgres.md) from the default SQLite to PostgreSQL. Personally, I recommend patience - once the initial join is complete there are rarely any issues with actually interacting with the room, but if you like you can just block "large" rooms entirely. | |

| 23 | ||

| 24 | ### Sessions | |

| 25 | ||

| 26 | Anything that requires modifying the device list [#7721](https://github.com/matrix-org/synapse/issues/7721) will take a while to propagate, again taking the client "Offline" until it's complete. This includes signing in and out, editing the public name and verifying e2ee. The main mitigation I recommend is to keep long-running sessions open e.g. by using Firefox SSB "Use this site in App mode" or Chromium PWA "Install Element". | |

| 27 | ||

| 28 | ### Recommended configuration | |

| 29 | ||

| 30 | Put the below in a new file at /etc/matrix-synapse/conf.d/sbc.yaml to override the defaults in homeserver.yaml. | |

| 31 | ||

| 32 | ``` | |

| 33 | # Set to false to disable presence tracking on this homeserver. | |

| 34 | use_presence: false | |

| 35 | ||

| 36 | # When this is enabled, the room "complexity" will be checked before a user | |

| 37 | # joins a new remote room. If it is above the complexity limit, the server will | |

| 38 | # disallow joining, or will instantly leave. | |

| 39 | limit_remote_rooms: | |

| 40 |   # Uncomment to enable room complexity checking. | |

| 41 |   #enabled: true | |

| 42 |   complexity: 3.0 | |

| 43 | ||

| 44 | # Database configuration | |

| 45 | database: | |

| 46 |   name: psycopg2 | |

| 47 |   args: | |

| 48 |     user: matrix-synapse | |

| 49 |     # Generate a long, secure one with a password manager | |

| 50 |     password: hunter2 | |

| 51 |     database: matrix-synapse | |

| 52 |     host: localhost | |

| 53 |     cp_min: 5 | |

| 54 |     cp_max: 10 | |

| 55 | ``` | |

| 56 | ||

| 57 | Currently the complexity is measured by [current_state_events / 500](https://github.com/matrix-org/synapse/blob/v1.20.1/synapse/storage/databases/main/events_worker.py#L986). You can find join times and your most complex rooms like this: | |

| 58 | ||

| 59 | ``` | |

| 60 | admin@homeserver:~$ zgrep '/client/r0/join/' /var/log/matrix-synapse/homeserver.log* | awk '{print $18, $25}' | sort --human-numeric-sort | |

| 61 | 29.922sec/-0.002sec /_matrix/client/r0/join/%23debian-fasttrack%3Apoddery.com | |

| 62 | 182.088sec/0.003sec /_matrix/client/r0/join/%23decentralizedweb-general%3Amatrix.org | |

| 63 | 911.625sec/-570.847sec /_matrix/client/r0/join/%23synapse%3Amatrix.org | |

| 64 | ||

| 65 | admin@homeserver:~$ sudo --user postgres psql matrix-synapse --command 'select canonical_alias, joined_members, current_state_events from room_stats_state natural join room_stats_current where canonical_alias is not null order by current_state_events desc fetch first 5 rows only' | |

| 66 | canonical_alias | joined_members | current_state_events | |

| 67 | -------------------------------+----------------+---------------------- | |

| 68 | #_oftc_#debian:matrix.org | 871 | 52355 | |

| 69 | #matrix:matrix.org | 6379 | 10684 | |

| 70 | #irc:matrix.org | 461 | 3751 | |

| 71 | #decentralizedweb-general:matrix.org | 997 | 1509 | |

| 72 | #whatsapp:maunium.net | 554 | 854 | |

| 73 | ```⏎ |
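The complexity arithmetic described above can be sketched in a few lines of Python. This is only an illustration, assuming the `current_state_events / 500` formula quoted above and the `complexity: 3.0` limit from the sample configuration; the function and constant names are made up for this example and are not Synapse's own.

```python
# Illustrative sketch only: mirrors the "current_state_events / 500" rule
# quoted above. Names are hypothetical, not taken from Synapse.
STATE_EVENTS_PER_COMPLEXITY_UNIT = 500  # divisor quoted in the text above
COMPLEXITY_LIMIT = 3.0  # value from the sample sbc.yaml above


def room_complexity(current_state_events: int) -> float:
    """Return the estimated complexity of a room from its state event count."""
    return current_state_events / STATE_EVENTS_PER_COMPLEXITY_UNIT


def would_be_blocked(current_state_events: int, limit: float = COMPLEXITY_LIMIT) -> bool:
    """Return True if the room's complexity is above the limit."""
    return room_complexity(current_state_events) > limit


# State event counts copied from the sample psql output above.
rooms = {
    "#_oftc_#debian:matrix.org": 52355,
    "#matrix:matrix.org": 10684,
    "#irc:matrix.org": 3751,
    "#decentralizedweb-general:matrix.org": 1509,
    "#whatsapp:maunium.net": 854,
}

for alias, state_events in rooms.items():
    verdict = "blocked" if would_be_blocked(state_events) else "allowed"
    print(f"{alias}: complexity {room_complexity(state_events):.2f} ({verdict})")
```

Under these assumptions a limit of 3.0 corresponds to 1500 current state events, so with the check enabled only the last room in the sample output would still be joinable.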

| 117 | 117 | Note that the appropriate values for those fields depend on the amount |

| 118 | 118 | of free memory the database host has available. |

| 119 | 119 | |

| 120 | Additionally, admins of large deployments might want to consider using huge pages | |

| 121 | to help manage memory, especially when using large values of `shared_buffers`. You | |

| 122 | can read more about that [here](https://www.postgresql.org/docs/10/kernel-resources.html#LINUX-HUGE-PAGES). | |

| 120 | 123 | |

| 121 | 124 | ## Porting from SQLite |

| 122 | 125 |

| 1208 | 1208 | # |

| 1209 | 1209 | #session_lifetime: 24h |

| 1210 | 1210 | |

| 1211 | # Time that an access token remains valid for, if the session is | |

| 1212 | # using refresh tokens. | |

| 1213 | # For more information about refresh tokens, please see the manual. | |

| 1214 | # Note that this only applies to clients which advertise support for | |

| 1215 | # refresh tokens. | |

| 1216 | # | |

| 1217 | # Note also that this is calculated at login time and refresh time: | |

| 1218 | # changes are not applied to existing sessions until they are refreshed. | |

| 1219 | # | |

| 1220 | # By default, this is 5 minutes. | |

| 1221 | # | |

| 1222 | #refreshable_access_token_lifetime: 5m | |

| 1223 | ||

| 1224 | # Time that a refresh token remains valid for (provided that it is not | |

| 1225 | # exchanged for another one first). | |

| 1226 | # This option can be used to automatically log-out inactive sessions. | |

| 1227 | # Please see the manual for more information. | |

| 1228 | # | |

| 1229 | # Note also that this is calculated at login time and refresh time: | |

| 1230 | # changes are not applied to existing sessions until they are refreshed. | |

| 1231 | # | |

| 1232 | # By default, this is infinite. | |

| 1233 | # | |

| 1234 | #refresh_token_lifetime: 24h | |

| 1235 | ||

| 1236 | # Time that an access token remains valid for, if the session is NOT | |

| 1237 | # using refresh tokens. | |

| 1238 | # Please note that not all clients support refresh tokens, so setting | |

| 1239 | # this to a short value may be inconvenient for some users who will | |

| 1240 | # then be logged out frequently. | |

| 1241 | # | |

| 1242 | # Note also that this is calculated at login time: changes are not applied | |

| 1243 | # retrospectively to existing sessions for users that have already logged in. | |

| 1244 | # | |

| 1245 | # By default, this is infinite. | |

| 1246 | # | |

| 1247 | #nonrefreshable_access_token_lifetime: 24h | |

| 1248 | ||

| 1211 | 1249 | # The user must provide all of the below types of 3PID when registering. |

| 1212 | 1250 | # |

| 1213 | 1251 | #registrations_require_3pid: |

| 70 | 70 | * `sender_avatar_url`: the avatar URL (as a `mxc://` URL) for the event's |

| 71 | 71 | sender |

| 72 | 72 | * `sender_hash`: a hash of the user ID of the sender |

| 73 | * `msgtype`: the type of the message | |

| 74 | * `body_text_html`: html representation of the message | |

| 75 | * `body_text_plain`: plaintext representation of the message | |

| 76 | * `image_url`: mxc url of an image, when "msgtype" is "m.image" | |

| 73 | 77 | * `link`: a `matrix.to` link to the room |

| 78 | * `avatar_url`: url to the room's avatar | |

| 74 | 79 | * `reason`: information on the event that triggered the email to be sent. It's an |

| 75 | 80 | object with the following attributes: |

| 76 | 81 | * `room_id`: the ID of the room the event was sent in |

| 0 | # Federation API | |

| 1 | ||

| 2 | This API allows a server administrator to manage Synapse's federation with other homeservers. | |

| 3 | ||

| 4 | Note: This API is new, experimental and "subject to change". | |

| 5 | ||

| 6 | ## List of destinations | |

| 7 | ||

| 8 | This API gets the current destination retry timing info for all remote servers. | |

| 9 | ||

| 10 | The list contains all the servers with which the server federates, | |

| 11 | regardless of whether an error occurred or not. | |

| 12 | If an error occurs, it may take up to 20 minutes for the error to be displayed here, | |

| 13 | as a complete retry must have failed. | |

| 14 | ||

| 15 | The API is: | |

| 16 | ||

| 17 | A standard request with no filtering: | |

| 18 | ||

| 19 | ``` | |

| 20 | GET /_synapse/admin/v1/federation/destinations | |

| 21 | ``` | |

| 22 | ||

| 23 | A response body like the following is returned: | |

| 24 | ||

| 25 | ```json | |

| 26 | { | |

| 27 | "destinations":[ | |

| 28 | { | |

| 29 | "destination": "matrix.org", | |

| 30 | "retry_last_ts": 1557332397936, | |

| 31 | "retry_interval": 3000000, | |

| 32 | "failure_ts": 1557329397936, | |

| 33 | "last_successful_stream_ordering": null | |

| 34 | } | |

| 35 | ], | |

| 36 | "total": 1 | |

| 37 | } | |

| 38 | ``` | |

| 39 | ||

| 40 | To paginate, check for `next_token` and if present, call the endpoint again | |

| 41 | with `from` set to the value of `next_token`. This will return a new page. | |

| 42 | ||

| 43 | If the endpoint does not return a `next_token` then there are no more destinations | |

| 44 | to paginate through. | |

| 45 | ||

| 46 | **Parameters** | |

| 47 | ||

| 48 | The following query parameters are available: | |

| 49 | ||

| 50 | - `from` - Offset in the returned list. Defaults to `0`. | |

| 51 | - `limit` - Maximum number of destinations to return. Defaults to `100`. | |

| 52 | - `order_by` - The method in which to sort the returned list of destinations. | |

| 53 | Valid values are: | |

| 54 | - `destination` - Destinations are ordered alphabetically by remote server name. | |

| 55 | This is the default. | |

| 56 | - `retry_last_ts` - Destinations are ordered by time of last retry attempt in ms. | |

| 57 | - `retry_interval` - Destinations are ordered by how long until next retry in ms. | |

| 58 | - `failure_ts` - Destinations are ordered by when the server started failing in ms. | |

| 59 | - `last_successful_stream_ordering` - Destinations are ordered by the stream ordering | |

| 60 | of the most recent successfully-sent PDU. | |

| 61 | - `dir` - Direction of the sort order. Either `f` for forwards or `b` for backwards. Setting | |

| 62 | this value to `b` will reverse the above sort order. Defaults to `f`. | |

| 63 | ||

| 64 | *Caution:* The database only has an index on the column `destination`. | |

| 65 | This means that if a different sort order is used, | |

| 66 | this can cause a large load on the database, especially for large environments. | |

| 67 | ||

| 68 | **Response** | |

| 69 | ||

| 70 | The following fields are returned in the JSON response body: | |

| 71 | ||

| 72 | - `destinations` - An array of objects, each containing information about a destination. | |

| 73 | Destination objects contain the following fields: | |

| 74 | - `destination` - string - Name of the remote server to federate. | |

| 75 | - `retry_last_ts` - integer - The last time Synapse tried and failed to reach the | |

| 76 | remote server, in ms. This is `0` if the last attempt to communicate with the | |

| 77 | remote server was successful. | |

| 78 | - `retry_interval` - integer - How long Synapse waits after its last attempt to reach | |

| 79 | the remote server before trying again, in ms. This is `0` if no further retrying is occurring. | |

| 80 | - `failure_ts` - nullable integer - The first time Synapse tried and failed to reach the | |

| 81 | remote server, in ms. This is `null` if communication with the remote server has never failed. | |

| 82 | - `last_successful_stream_ordering` - nullable integer - The stream ordering of the most | |

| 83 | recent successfully-sent [PDU](understanding_synapse_through_grafana_graphs.md#federation) | |

| 84 | to this destination, or `null` if this information has not been tracked yet. | |

| 85 | - `next_token`: string representing a positive integer - Indication for pagination. See above. | |

| 86 | - `total` - integer - Total number of destinations. | |

| 87 | ||
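The pagination rule described above (keep calling with `from` set to `next_token` until no `next_token` is returned) can be sketched as a small Python generator. This is a minimal sketch, not an official client: `fetch_page` is a hypothetical callable that you would implement to perform the authenticated `GET /_synapse/admin/v1/federation/destinations` request with the given query parameters and return the decoded JSON body. A fake version is used here so the example runs without a server.

```python
from typing import Any, Callable, Dict, Iterator

# Hypothetical helper type: performs one authenticated GET with the given
# query parameters and returns the decoded JSON response body.
FetchPage = Callable[[Dict[str, str]], Dict[str, Any]]


def iter_destinations(fetch_page: FetchPage, limit: int = 100) -> Iterator[Dict[str, Any]]:
    """Yield every destination, following `next_token` until it is absent."""
    params = {"limit": str(limit)}
    while True:
        body = fetch_page(params)
        yield from body.get("destinations", [])
        next_token = body.get("next_token")
        if next_token is None:
            return  # no next_token means there are no more pages
        params = {"limit": str(limit), "from": str(next_token)}


def fake_fetch(params: Dict[str, str]) -> Dict[str, Any]:
    """Stand-in for a real HTTP call, returning two made-up pages."""
    pages = {
        None: {
            "destinations": [{"destination": "a.example"}, {"destination": "b.example"}],
            "next_token": "2",
            "total": 3,
        },
        "2": {"destinations": [{"destination": "c.example"}], "total": 3},
    }
    return pages[params.get("from")]


names = [d["destination"] for d in iter_destinations(fake_fetch, limit=2)]
print(names)
```

Passing the HTTP call in as a function keeps the paging logic independent of whichever HTTP library and access token handling you prefer.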

| 88 | # Destination Details API | |

| 89 | ||

| 90 | This API gets the retry timing info for a specific remote server. | |

| 91 | ||

| 92 | The API is: | |

| 93 | ||

| 94 | ``` | |

| 95 | GET /_synapse/admin/v1/federation/destinations/<destination> | |

| 96 | ``` | |

| 97 | ||

| 98 | A response body like the following is returned: | |

| 99 | ||

| 100 | ```json | |

| 101 | { | |

| 102 | "destination": "matrix.org", | |

| 103 | "retry_last_ts": 1557332397936, | |

| 104 | "retry_interval": 3000000, | |

| 105 | "failure_ts": 1557329397936, | |

| 106 | "last_successful_stream_ordering": null | |

| 107 | } | |

| 108 | ``` | |

| 109 | ||

| 110 | **Response** | |

| 111 | ||

| 112 | The response fields are the same as those in the `destinations` array in the | |

| 113 | [List of destinations](#list-of-destinations) response. |

| 0 | ## Admin FAQ | |

| 1 | ||

| 2 | How do I become a server admin? | |

| 3 | --- | |

| 4 | If your server already has an admin account you should use the user admin API to promote other accounts to become admins. See [User Admin API](../../admin_api/user_admin_api.md#Change-whether-a-user-is-a-server-administrator-or-not) | |

| 5 | ||

| 6 | If you don't have any admin accounts yet you won't be able to use the admin API so you'll have to edit the database manually. Manually editing the database is generally not recommended so once you have an admin account, use the admin APIs to make further changes. | |

| 7 | ||

| 8 | ```sql | |

| 9 | UPDATE users SET admin = 1 WHERE name = '@foo:bar.com'; | |

| 10 | ``` | |

| 11 | What servers is my server talking to? | |

| 12 | --- | |

| 13 | Run this sql query on your db: | |

| 14 | ```sql | |

| 15 | SELECT * FROM destinations; | |

| 16 | ``` | |

| 17 | ||

| 18 | What servers are currently participating in this room? | |

| 19 | --- | |

| 20 | Run this sql query on your db: | |

| 21 | ```sql | |

| 22 | SELECT DISTINCT split_part(state_key, ':', 2) | |

| 23 | FROM current_state_events AS c | |

| 24 | INNER JOIN room_memberships AS m USING (room_id, event_id) | |

| 25 | WHERE room_id = '!cURbafjkfsMDVwdRDQ:matrix.org' AND m.membership = 'join'; | |

| 26 | ``` | |

| 27 | ||

| 28 | What users are registered on my server? | |

| 29 | --- | |

| 30 | ```sql | |

| 31 | SELECT name FROM users; | |

| 32 | ``` | |

| 33 | ||

| 34 | Manually resetting passwords: | |

| 35 | --- | |

| 36 | See https://github.com/matrix-org/synapse/blob/master/README.rst#password-reset | |

| 37 | ||

| 38 | I have a problem with my server. Can I just delete my database and start again? | |

| 39 | --- | |

| 40 | Deleting your database is unlikely to make anything better. | |

| 41 | ||

| 42 | It's easy to make the mistake of thinking that you can start again from a clean slate by dropping your database, but things don't work like that in a federated network: lots of other servers have information about your server. | |

| 43 | ||

| 44 | For example: other servers might think that you are in a room, your server will think that you are not, and you'll probably be unable to interact with that room in a sensible way ever again. | |

| 45 | ||

| 46 | In general, there are better solutions to any problem than dropping the database. Come and seek help in https://matrix.to/#/#synapse:matrix.org. | |

| 47 | ||

| 48 | There are two exceptions when it might be sensible to delete your database and start again: | |

| 49 | * You have *never* joined any rooms which are federated with other servers. For instance, a local deployment which the outside world can't talk to. | |

| 50 | * You are changing the `server_name` in the homeserver configuration. In effect this makes your server a completely new one from the point of view of the network, so in this case it makes sense to start with a clean database. | |

| 51 | (In both cases you probably also want to clear out the media_store.) | |

| 52 | ||

| 53 | I've stuffed up access to my room, how can I delete it to free up the alias? | |

| 54 | --- | |

| 55 | Using the following curl command: | |

| 56 | ``` | |

| 57 | curl -H 'Authorization: Bearer <access-token>' -X DELETE https://matrix.org/_matrix/client/r0/directory/room/<room-alias> | |

| 58 | ``` | |

| 59 | `<access-token>` - can be obtained in riot by looking in the riot settings, down the bottom is: | |

| 60 | Access Token:\<click to reveal\> | |

| 61 | ||

| 62 | `<room-alias>` - the room alias, e.g. #my_room:matrix.org. This may also need to be URL-encoded, for example %23my_room%3Amatrix.org | |

| 63 | ||

| 64 | How can I find the lines corresponding to a given HTTP request in my homeserver log? | |

| 65 | --- | |

| 66 | ||

| 67 | Synapse tags each log line according to the HTTP request it is processing. When it finishes processing each request, it logs a line containing the words `Processed request: `. For example: | |

| 68 | ||

| 69 | ``` | |

| 70 | 2019-02-14 22:35:08,196 - synapse.access.http.8008 - 302 - INFO - GET-37 - ::1 - 8008 - {@richvdh:localhost} Processed request: 0.173sec/0.001sec (0.002sec, 0.000sec) (0.027sec/0.026sec/2) 687B 200 "GET /_matrix/client/r0/sync HTTP/1.1" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36" [0 dbevts]" | |

| 71 | ``` | |

| 72 | ||

| 73 | Here we can see that the request has been tagged with `GET-37`. (The tag depends on the method of the HTTP request, so might start with `GET-`, `PUT-`, `POST-`, `OPTIONS-` or `DELETE-`.) So to find all lines corresponding to this request, we can do: | |

| 74 | ||

| 75 | ``` | |

| 76 | grep 'GET-37' homeserver.log | |

| 77 | ``` | |
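As an alternative to `grep`, the same filtering can be sketched in Python. This is a minimal sketch that assumes the request tag always appears between ` - ` separators, as in the example line above; the regular expression, the function names and the shortened sample lines are illustrative assumptions, not part of Synapse.

```python
import re
from typing import Iterable, List, Optional

# Assumed tag shape, based on the example line above: METHOD-<number>
# surrounded by " - " separators.
REQUEST_TAG = re.compile(r" - ((?:GET|PUT|POST|OPTIONS|DELETE)-\d+) - ")


def request_tag(line: str) -> Optional[str]:
    """Return the request tag of a log line, or None if it has none."""
    match = REQUEST_TAG.search(line)
    return match.group(1) if match else None


def lines_for_request(lines: Iterable[str], tag: str) -> List[str]:
    """Return only the lines whose request tag is exactly `tag`."""
    return [line for line in lines if request_tag(line) == tag]


# Shortened, made-up log lines in the same layout as the example above.
log = [
    "2019-02-14 22:35:08,100 - synapse.handlers.sync - 100 - INFO - GET-37 - example message",
    "2019-02-14 22:35:08,150 - synapse.handlers.sync - 100 - INFO - GET-370 - example message",
    "2019-02-14 22:35:08,196 - synapse.access.http.8008 - 302 - INFO - GET-37 - ::1 - 8008 - Processed request: ...",
]

matching = lines_for_request(log, "GET-37")
print(len(matching))
```

Comparing the whole tag avoids also matching longer tags such as `GET-370`, which a plain substring search for `GET-37` would include.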

| 78 | ||

| 79 | If you want to paste that output into a github issue or matrix room, please remember to surround it with triple-backticks (```) to make it legible (see https://help.github.com/en/articles/basic-writing-and-formatting-syntax#quoting-code). | |

| 80 | ||

| 81 | ||

| 82 | What do all those fields in the 'Processed' line mean? | |

| 83 | --- | |

| 84 | See [Request log format](request_log.md). | |

| 85 | ||

| 86 | ||

| 87 | What are the biggest rooms on my server? | |

| 88 | --- | |

| 89 | ||

| 90 | ```sql | |

| 91 | SELECT s.canonical_alias, g.room_id, count(*) AS num_rows | |

| 92 | FROM | |

| 93 | state_groups_state AS g, | |

| 94 | room_stats_state AS s | |

| 95 | WHERE g.room_id = s.room_id | |

| 96 | GROUP BY s.canonical_alias, g.room_id | |

| 97 | ORDER BY num_rows desc | |

| 98 | LIMIT 10; | |

| 99 | ``` | |

| 100 | ||

| 101 | You can also use the [List Room API](../../admin_api/rooms.md#list-room-api) | |

| 102 | and `order_by` `state_events`. |

| 0 | This blog post by Victor Berger explains how to use many of the tools listed on this page: https://levans.fr/shrink-synapse-database.html | |

| 1 | ||

| 2 | # List of useful tools and scripts for maintaining the Synapse database: | |

| 3 | ||

| 4 | ## [Purge Remote Media API](../../admin_api/media_admin_api.md#purge-remote-media-api) | |

| 5 | The purge remote media API allows server admins to purge old cached remote media. | |

| 6 | ||

| 7 | ## [Purge Local Media API](../../admin_api/media_admin_api.md#delete-local-media) | |

| 8 | This API deletes the *local* media from the disk of your own server. | |

| 9 | ||

| 10 | ## [Purge History API](../../admin_api/purge_history_api.md) | |

| 11 | The purge history API allows server admins to purge historic events from their database, reclaiming disk space. | |

| 12 | ||

| 13 | ## [synapse-compress-state](https://github.com/matrix-org/rust-synapse-compress-state) | |

| 14 | Tool for compressing (deduplicating) `state_groups_state` table. | |

| 15 | ||

| 16 | ## [SQL for analyzing Synapse PostgreSQL database stats](useful_sql_for_admins.md) | |

| 17 | Some easy SQL that reports useful stats about your Synapse database.⏎ |

| 0 | # How do State Groups work? | |

| 1 | ||

| 2 | As a general rule, I encourage people who want to understand the deepest darkest secrets of the database schema to drop by #synapse-dev:matrix.org and ask questions. | |

| 3 | ||

| 4 | However, one question that comes up frequently is that of how "state groups" work, and why the `state_groups_state` table gets so big, so here's an attempt to answer that question. | |

| 5 | ||

| 6 | We need to be able to relatively quickly calculate the state of a room at any point in that room's history. In other words, we need to know the state of the room at each event in that room. This is done as follows: | |

| 7 | ||

| 8 | A sequence of events where the state is the same is grouped together into a `state_group`; the mapping is recorded in `event_to_state_groups`. (Technically speaking, since a state event usually changes the state in the room, we are recording the state of the room *after* the given event id: which is to say, as a handwavey simplification, the first event in a state group is normally a state event, and others in the same state group are normally non-state-events.) | |

| 9 | ||

| 10 | `state_groups` records, for each state group, the id of the room that we're looking at, and also the id of the first event in that group. (I'm not sure if that event id is used much in practice.) | |

| 11 | ||

| 12 | Now, if we stored all the room state for each `state_group`, that would be a huge amount of data. Instead, for each state group, we normally store the difference between the state in that group and some other state group, and only occasionally (every 100 state changes or so) record the full state. | |

| 13 | ||

| 14 | So, most state groups have an entry in `state_group_edges` (don't ask me why it's not a column in `state_groups`) which records the previous state group in the room, and `state_groups_state` records the differences in state since that previous state group. | |

| 15 | ||

| 16 | A full state group just records the event id for each piece of state in the room at that point. | |
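The delta scheme described above can be illustrated with a small in-memory model in Python. This is a simplified sketch, not Synapse's implementation: `edges` stands in for `state_group_edges` (state group to previous state group), `deltas` stands in for the rows of `state_groups_state` belonging to each group, and all event IDs are made up.

```python
from typing import Dict, List, Optional, Tuple

StateKey = Tuple[str, str]      # (event type, state_key)
StateMap = Dict[StateKey, str]  # maps to the event id holding that piece of state


def resolve_state(
    group: int,
    edges: Dict[int, int],
    deltas: Dict[int, StateMap],
) -> StateMap:
    """Rebuild the full state for `group` by walking back to a full state group."""
    chain: List[int] = []
    current: Optional[int] = group
    while current is not None:
        chain.append(current)
        current = edges.get(current)  # None once we reach a group with no edge

    state: StateMap = {}
    for g in reversed(chain):  # apply the oldest group first, newest last
        state.update(deltas.get(g, {}))
    return state


# Group 1 is a "full" state group; groups 2 and 3 only store differences.
edges = {2: 1, 3: 2}
deltas = {
    1: {("m.room.create", ""): "$create", ("m.room.member", "@alice:example.com"): "$join1"},
    2: {("m.room.topic", ""): "$topic"},
    3: {("m.room.member", "@alice:example.com"): "$join2"},
}

full_state = resolve_state(3, edges, deltas)
print(full_state)
```

In this model the longer the chain of edges, the more rows have to be read to rebuild the state, which is the trade-off behind periodically recording a full state group.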

| 17 | ||

| 18 | ## Known bugs with state groups | |

| 19 | ||

| 20 | There are various reasons that we can end up creating many more state groups than we need: see https://github.com/matrix-org/synapse/issues/3364 for more details. | |

| 21 | ||

| 22 | ## Compression tool | |

| 23 | ||

| 24 | There is a tool at https://github.com/matrix-org/rust-synapse-compress-state which can compress the `state_groups_state` on a room-by-room basis (essentially, it reduces the number of "full" state groups). This can result in dramatic reductions of the storage used.⏎ |

| 0 | ## Understanding Synapse through Grafana graphs | |

| 1 | ||

| 2 | It is possible to monitor much of the internal state of Synapse using [Prometheus](https://prometheus.io) | |

| 3 | metrics and [Grafana](https://grafana.com/). | |

| 4 | A guide for configuring Synapse to provide metrics is available [here](../../metrics-howto.md) | |

| 5 | and information on setting up Grafana is [here](https://github.com/matrix-org/synapse/tree/master/contrib/grafana). | |

| 6 | In this setup, Prometheus will periodically scrape the information Synapse provides and | |

| 7 | store a record of it over time. Grafana is then used as an interface to query and | |

| 8 | present this information through a series of pretty graphs. | |

| 9 | ||

| 10 | Once you have grafana set up, and assuming you're using [our grafana dashboard template](https://github.com/matrix-org/synapse/blob/master/contrib/grafana/synapse.json), look for the following graphs when debugging a slow/overloaded Synapse: | |

| 11 | ||

| 12 | ## Message Event Send Time | |

| 13 | ||

| 14 |  | |

| 15 | ||

| 16 | This, along with the CPU and Memory graphs, is a good way to check the general health of your Synapse instance. It represents how long it takes for a user on your homeserver to send a message. | |

| 17 | ||

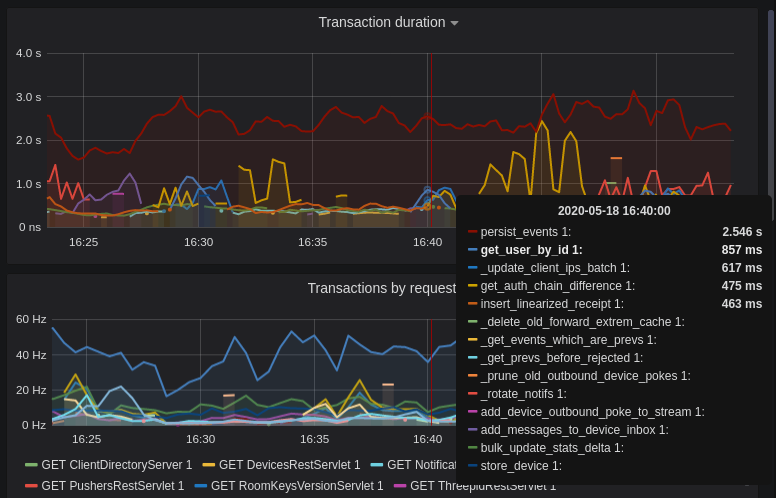

| 18 | ## Transaction Count and Transaction Duration | |

| 19 | ||

| 20 |  | |

| 21 | ||

| 22 |  | |

| 23 | ||

| 24 | These graphs show the database transactions that are occurring the most frequently, as well as those that are taking the most time to execute. | |

| 25 | ||

| 26 |  | |

| 27 | ||

| 28 | In the first graph, we can see obvious spikes corresponding to lots of `get_user_by_id` transactions. This would be useful information to figure out which part of the Synapse codebase is potentially creating a heavy load on the system. However, be sure to cross-reference this with Transaction Duration, which shows that `get_user_by_id` is actually a very quick database transaction and isn't causing as much load as others, like `persist_events`: | |

| 29 | ||

| 30 |  | |

| 31 | ||

| 32 | Still, it's probably worth investigating why we're getting users from the database that often, and whether it's possible to reduce the number of queries we make by adjusting our cache factor(s). | |

| 33 | ||

| 34 | The `persist_events` transaction is responsible for saving new room events to the Synapse database, so can often show a high transaction duration. | |

| 35 | ||

| 36 | ## Federation | |

| 37 | ||

| 38 | The charts in the "Federation" section show information about incoming and outgoing federation requests. Federation data can be divided into two basic types: | |

| 39 | ||

| 40 | - PDU (Persistent Data Unit) - room events: messages, state events (join/leave), etc. These are permanently stored in the database. | |

| 41 | - EDU (Ephemeral Data Unit) - other data, which need not be stored permanently, such as read receipts, typing notifications. | |

| 42 | ||

| 43 | The "Outgoing EDUs by type" chart shows the EDUs within outgoing federation requests by type: `m.device_list_update`, `m.direct_to_device`, `m.presence`, `m.receipt`, `m.typing`. | |

| 44 | ||

| 45 | If you see a large number of `m.presence` EDUs and are having trouble with too much CPU load, you can disable `presence` in the Synapse config. See also [#3971](https://github.com/matrix-org/synapse/issues/3971). | |

| 46 | ||

| 47 | ## Caches | |

| 48 | ||

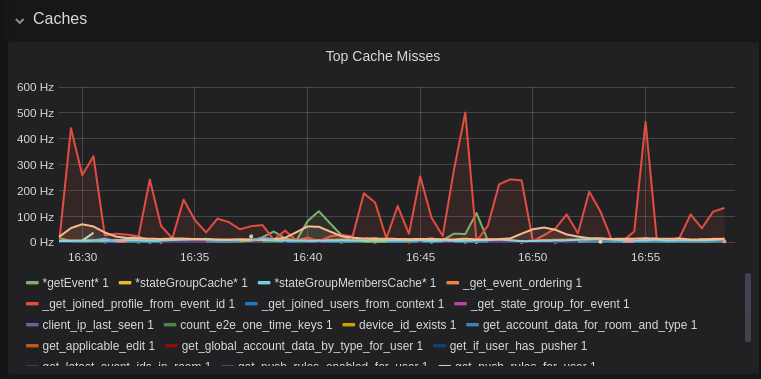

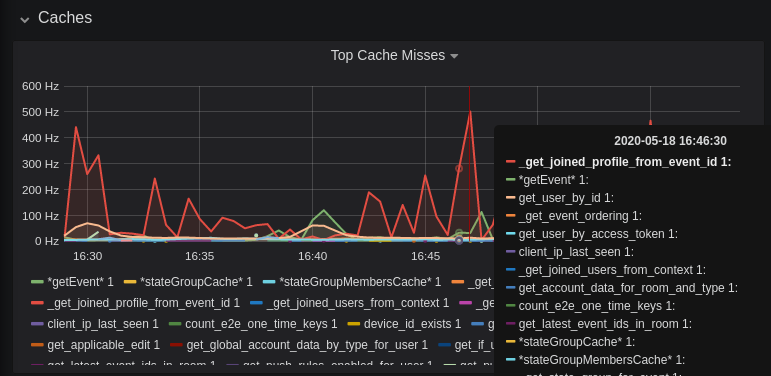

| 49 |  | |

| 50 | ||

| 51 |  | |

| 52 | ||

| 53 | This is quite a useful graph. It shows how many times Synapse attempts to retrieve a piece of data from a cache which the cache did not contain, thus resulting in a call to the database. We can see here that the `_get_joined_profile_from_event_id` cache is being requested a lot, and often the data we're after is not cached. | |

| 54 | ||

| 55 | Cross-referencing this with the Eviction Rate graph, which shows that entries are being evicted from `_get_joined_profile_from_event_id` quite often: | |

| 56 | ||

| 57 |  | |

| 58 | ||

| 59 | we should probably consider raising the size of that cache by raising its cache factor (a multiplier value for the size of an individual cache). Information on doing so is available [here](https://github.com/matrix-org/synapse/blob/ee421e524478c1ad8d43741c27379499c2f6135c/docs/sample_config.yaml#L608-L642) (note that the configuration of individual cache factors through the configuration file is available in Synapse v1.14.0+, whereas doing so through environment variables has been supported for a very long time). Note that this will increase Synapse's overall memory usage. | |

| 60 | ||

| 61 | ## Forward Extremities | |

| 62 | ||

| 63 |  | |

| 64 | ||

| 65 | Forward extremities are the leaf events at the end of a DAG in a room, aka events that have no children. The more that exist in a room, the more [state resolution](https://spec.matrix.org/v1.1/server-server-api/#room-state-resolution) that Synapse needs to perform (hint: it's an expensive operation). While Synapse has code to prevent too many of these existing at one time in a room, bugs can sometimes make them crop up again. | |

| 66 | ||

| 67 | If a room has >10 forward extremities, it's worth checking which room is the culprit and potentially removing them using the SQL queries mentioned in [#1760](https://github.com/matrix-org/synapse/issues/1760). | |
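To make the definition of forward extremities concrete, here is a minimal Python sketch. It assumes a toy in-memory DAG in which each event ID maps to the list of event IDs in its `prev_events`; the function name and the event IDs are illustrative, and real rooms would be inspected via the database rather than like this.

```python
from typing import Dict, List, Set


def forward_extremities(prev_events: Dict[str, List[str]]) -> Set[str]:
    """Return the events that no other event lists in its prev_events."""
    referenced = {prev for prevs in prev_events.values() for prev in prevs}
    return set(prev_events) - referenced


# A toy room DAG: B and C both follow A, D merges B and C,
# and E branches off B without being merged back in.
dag = {
    "$A": [],
    "$B": ["$A"],
    "$C": ["$A"],
    "$D": ["$B", "$C"],
    "$E": ["$B"],
}

extremities = forward_extremities(dag)
print(sorted(extremities))
```

In this toy DAG the next event sent would normally reference both remaining leaves in its `prev_events`, bringing the count back down to one.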

| 68 | ||

| 69 | ## Garbage Collection | |

| 70 | ||

| 71 |  | |

| 72 | ||

| 73 | Large spikes in garbage collection times (bigger than shown here, I'm talking in the | |

| 74 | multiple seconds range) can cause lots of problems in Synapse performance. They are usually a | |

| 75 | symptom of other problems rather than a cause, though, so check other graphs for what might be causing them. | |

| 76 | ||

| 77 | ## Final Thoughts | |

| 78 | ||

| 79 | If you're still having performance problems with your Synapse instance and you've | |

| 80 | tried everything you can, it may just be a lack of system resources. Consider adding | |

| 81 | more CPU and RAM, and using [worker mode](../../workers.md) | |

| 82 | to spread the load across multiple CPU cores / multiple machines for your homeserver. | |

| 83 |

| 0 | ## Some useful SQL queries for Synapse Admins | |

| 1 | ||

| 2 | ## Size of full matrix db | |

| 3 | `SELECT pg_size_pretty( pg_database_size( 'matrix' ) );` | |

| 4 | ### Result example: | |

| 5 | ``` | |

| 6 | pg_size_pretty | |

| 7 | ---------------- | |

| 8 | 6420 MB | |

| 9 | (1 row) | |

| 10 | ``` | |

| 11 | ## Show top 20 largest rooms by state events count | |

| 12 | ```sql | |

| 13 | SELECT r.name, s.room_id, s.current_state_events | |

| 14 | FROM room_stats_current s | |

| 15 | LEFT JOIN room_stats_state r USING (room_id) | |

| 16 | ORDER BY current_state_events DESC | |

| 17 | LIMIT 20; | |

| 18 | ``` | |

| 19 | ||

| 20 | and by state_group_events count: | |

| 21 | ```sql | |

| 22 | SELECT rss.name, s.room_id, count(s.room_id) FROM state_groups_state s | |

| 23 | LEFT JOIN room_stats_state rss USING (room_id) | |

| 24 | GROUP BY s.room_id, rss.name | |

| 25 | ORDER BY count(s.room_id) DESC | |

| 26 | LIMIT 20; | |

| 27 | ``` | |

| 28 | The same query, but with the join removed for performance reasons: | |

| 29 | ```sql | |

| 30 | SELECT s.room_id, count(s.room_id) FROM state_groups_state s | |

| 31 | GROUP BY s.room_id | |

| 32 | ORDER BY count(s.room_id) DESC | |

| 33 | LIMIT 20; | |

| 34 | ``` | |

| 35 | ||

| 36 | ## Show top 20 largest tables by row count | |

| 37 | ```sql | |

| 38 | SELECT relname, n_live_tup as rows | |

| 39 | FROM pg_stat_user_tables | |

| 40 | ORDER BY n_live_tup DESC | |

| 41 | LIMIT 20; | |

| 42 | ``` | |

| 43 | This query is quick, but may be very approximate. For the exact number of rows, use `SELECT COUNT(*) FROM <table_name>`. | |

| 44 | ### Result example: | |

| 45 | ``` | |

| 46 | state_groups_state - 161687170 | |

| 47 | event_auth - 8584785 | |

| 48 | event_edges - 6995633 | |

| 49 | event_json - 6585916 | |

| 50 | event_reference_hashes - 6580990 | |

| 51 | events - 6578879 | |

| 52 | received_transactions - 5713989 | |

| 53 | event_to_state_groups - 4873377 | |

| 54 | stream_ordering_to_exterm - 4136285 | |

| 55 | current_state_delta_stream - 3770972 | |

| 56 | event_search - 3670521 | |

| 57 | state_events - 2845082 | |

| 58 | room_memberships - 2785854 | |

| 59 | cache_invalidation_stream - 2448218 | |

| 60 | state_groups - 1255467 | |

| 61 | state_group_edges - 1229849 | |

| 62 | current_state_events - 1222905 | |

| 63 | users_in_public_rooms - 364059 | |

| 64 | device_lists_stream - 326903 | |

| 65 | user_directory_search - 316433 | |

| 66 | ``` | |

| 67 | ||

| 68 | ## Show top 20 rooms by new events count in last 1 day: | |

| 69 | ```sql | |

| 70 | SELECT e.room_id, r.name, COUNT(e.event_id) cnt FROM events e | |

| 71 | LEFT JOIN room_stats_state r USING (room_id) | |

| 72 | WHERE e.origin_server_ts >= DATE_PART('epoch', NOW() - INTERVAL '1 day') * 1000 GROUP BY e.room_id, r.name ORDER BY cnt DESC LIMIT 20; | |

| 73 | ``` | |
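The cutoff in the `WHERE` clause above turns "one day ago" into milliseconds since the Unix epoch, which is the unit the query compares `origin_server_ts` against. A minimal Python sketch of the same arithmetic follows; `cutoff_ms` is a hypothetical helper name used only for illustration and is not part of Synapse.

```python
from datetime import datetime, timedelta, timezone


def cutoff_ms(now: datetime, window: timedelta) -> int:
    """Return `now - window` as milliseconds since the Unix epoch."""
    return int((now - window).timestamp() * 1000)


# Example: the cutoff for "the last 1 day", relative to the current time.
now = datetime.now(timezone.utc)
print(cutoff_ms(now, timedelta(days=1)))
```

Passing a timezone-aware `datetime` avoids depending on the local timezone of the machine that runs the script.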

| 74 | ||

| 75 | ## Show top 20 users on the homeserver by sent events (messages) in the last month: | |

| 76 | ```sql | |

| 77 | SELECT user_id, SUM(total_events) | |

| 78 | FROM user_stats_historical | |

| 79 | WHERE TO_TIMESTAMP(end_ts/1000) AT TIME ZONE 'UTC' > date_trunc('day', now() - interval '1 month') | |

| 80 | GROUP BY user_id | |

| 81 | ORDER BY SUM(total_events) DESC | |

| 82 | LIMIT 20; | |

| 83 | ``` | |

| 84 | ||

| 85 | ## Show the last 100 messages from a given user, with room names: | |

| 86 | ```sql | |

| 87 | SELECT e.room_id, r.name, e.event_id, e.type, e.content, j.json FROM events e | |

| 88 | LEFT JOIN event_json j USING (event_id) | |

| 89 | LEFT JOIN room_stats_state r ON r.room_id = e.room_id | |

| 90 | WHERE sender = '@LOGIN:example.com' | |

| 91 | AND e.type = 'm.room.message' | |

| 92 | ORDER BY stream_ordering DESC | |

| 93 | LIMIT 100; | |

| 94 | ``` | |

| 95 | ||

| 96 | ## Show top 20 largest tables by storage size | |

| 97 | ```sql | |

| 98 | SELECT nspname || '.' || relname AS "relation", | |

| 99 | pg_size_pretty(pg_total_relation_size(C.oid)) AS "total_size" | |

| 100 | FROM pg_class C | |

| 101 | LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace) | |

| 102 | WHERE nspname NOT IN ('pg_catalog', 'information_schema') | |

| 103 | AND C.relkind <> 'i' | |

| 104 | AND nspname !~ '^pg_toast' | |

| 105 | ORDER BY pg_total_relation_size(C.oid) DESC | |

| 106 | LIMIT 20; | |

| 107 | ``` | |

| 108 | ### Result example: | |

| 109 | ``` | |

| 110 | public.state_groups_state - 27 GB | |

| 111 | public.event_json - 9855 MB | |

| 112 | public.events - 3675 MB | |

| 113 | public.event_edges - 3404 MB | |

| 114 | public.received_transactions - 2745 MB | |

| 115 | public.event_reference_hashes - 1864 MB | |

| 116 | public.event_auth - 1775 MB | |

| 117 | public.stream_ordering_to_exterm - 1663 MB | |

| 118 | public.event_search - 1370 MB | |

| 119 | public.room_memberships - 1050 MB | |

| 120 | public.event_to_state_groups - 948 MB | |

| 121 | public.current_state_delta_stream - 711 MB | |

| 122 | public.state_events - 611 MB | |

| 123 | public.presence_stream - 530 MB | |

| 124 | public.current_state_events - 525 MB | |

| 125 | public.cache_invalidation_stream - 466 MB | |

| 126 | public.receipts_linearized - 279 MB | |

| 127 | public.state_groups - 160 MB | |

| 128 | public.device_lists_remote_cache - 124 MB | |

| 129 | public.state_group_edges - 122 MB | |

| 130 | ``` | |

| 131 | ||

| 132 | ## Show rooms with names, sorted by the number of events in each room | |

| 133 | `echo "select event_json.room_id,room_stats_state.name from event_json,room_stats_state where room_stats_state.room_id=event_json.room_id" | psql synapse | sort | uniq -c | sort -n` | |

| 134 | ### Result example: | |

| 135 | ``` | |

| 136 | 9459 !FPUfgzXYWTKgIrwKxW:matrix.org | This Week in Matrix | |

| 137 | 9459 !FPUfgzXYWTKgIrwKxW:matrix.org | This Week in Matrix (TWIM) | |

| 138 | 17799 !iDIOImbmXxwNngznsa:matrix.org | Linux in Russian | |

| 139 | 18739 !GnEEPYXUhoaHbkFBNX:matrix.org | Riot Android | |

| 140 | 23373 !QtykxKocfZaZOUrTwp:matrix.org | Matrix HQ | |

| 141 | 39504 !gTQfWzbYncrtNrvEkB:matrix.org | ru.[matrix] | |

| 142 | 43601 !iNmaIQExDMeqdITdHH:matrix.org | Riot | |

| 143 | 43601 !iNmaIQExDMeqdITdHH:matrix.org | Riot Web/Desktop | |

| 144 | ``` | |

| 145 | ||

| 146 | ## Look up room state info by a list of room IDs | |

| 147 | ```sql | |

| 148 | SELECT rss.room_id, rss.name, rss.canonical_alias, rss.topic, rss.encryption, rsc.joined_members, rsc.local_users_in_room, rss.join_rules | |

| 149 | FROM room_stats_state rss | |

| 150 | LEFT JOIN room_stats_current rsc USING (room_id) | |

| 151 | WHERE room_id IN ( | |

| 152 | '!OGEhHVWSdvArJzumhm:matrix.org', | |

| 153 | '!YTvKGNlinIzlkMTVRl:matrix.org' | |

| 154 | ) | |

| 155 | ``` | |

| 209 | 209 | ^/_matrix/federation/v1/get_groups_publicised$ |

| 210 | 210 | ^/_matrix/key/v2/query |

| 211 | 211 | ^/_matrix/federation/unstable/org.matrix.msc2946/spaces/ |

| 212 | ^/_matrix/federation/unstable/org.matrix.msc2946/hierarchy/ | |

| 212 | ^/_matrix/federation/(v1|unstable/org.matrix.msc2946)/hierarchy/ | |

| 213 | 213 | |

| 214 | 214 | # Inbound federation transaction request |

| 215 | 215 | ^/_matrix/federation/v1/send/ |

| 222 | 222 | ^/_matrix/client/(api/v1|r0|v3|unstable)/rooms/.*/members$ |

| 223 | 223 | ^/_matrix/client/(api/v1|r0|v3|unstable)/rooms/.*/state$ |

| 224 | 224 | ^/_matrix/client/unstable/org.matrix.msc2946/rooms/.*/spaces$ |

| 225 | ^/_matrix/client/unstable/org.matrix.msc2946/rooms/.*/hierarchy$ | |

| 225 | ^/_matrix/client/(v1|unstable/org.matrix.msc2946)/rooms/.*/hierarchy$ | |

| 226 | 226 | ^/_matrix/client/unstable/im.nheko.summary/rooms/.*/summary$ |

| 227 | 227 | ^/_matrix/client/(api/v1|r0|v3|unstable)/account/3pid$ |

| 228 | 228 | ^/_matrix/client/(api/v1|r0|v3|unstable)/devices$ |
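The updated hierarchy patterns in this hunk accept both the stable `v1` prefix and the unstable MSC2946 prefix. A small sketch, assuming Python's `re` module behaves like the reverse proxy's regex matcher for these patterns, can be used to sanity-check which paths would match. The request paths and the `routes_to_worker` helper below are hypothetical and only for illustration.

```python
import re

# Patterns copied from the worker documentation hunk above.
FEDERATION_HIERARCHY = re.compile(
    r"^/_matrix/federation/(v1|unstable/org.matrix.msc2946)/hierarchy/"
)
CLIENT_HIERARCHY = re.compile(
    r"^/_matrix/client/(v1|unstable/org.matrix.msc2946)/rooms/.*/hierarchy$"
)


def routes_to_worker(path: str) -> bool:
    """Return True if either hierarchy pattern matches the request path."""
    return bool(
        FEDERATION_HIERARCHY.search(path) or CLIENT_HIERARCHY.search(path)
    )


# Hypothetical request paths, for illustration only.
for path in (
    "/_matrix/client/v1/rooms/!abc:example.com/hierarchy",
    "/_matrix/federation/unstable/org.matrix.msc2946/hierarchy/!abc:example.com",
    "/_matrix/client/v3/rooms/!abc:example.com/hierarchy",
):
    print(path, routes_to_worker(path))
```

The first two paths match; the third does not, because the client pattern only allows `v1` or the unstable MSC2946 prefix directly after `/_matrix/client/`.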

| 32 | 32 | |synapse/storage/databases/main/event_federation.py |

| 33 | 33 | |synapse/storage/databases/main/event_push_actions.py |

| 34 | 34 | |synapse/storage/databases/main/events_bg_updates.py |

| 35 | |synapse/storage/databases/main/events_worker.py | |

| 36 | 35 | |synapse/storage/databases/main/group_server.py |

| 37 | 36 | |synapse/storage/databases/main/metrics.py |

| 38 | 37 | |synapse/storage/databases/main/monthly_active_users.py |

| 86 | 85 | |tests/push/test_presentable_names.py |

| 87 | 86 | |tests/push/test_push_rule_evaluator.py |

| 88 | 87 | |tests/rest/admin/test_admin.py |

| 89 | |tests/rest/admin/test_device.py | |

| 90 | |tests/rest/admin/test_media.py | |

| 91 | |tests/rest/admin/test_server_notice.py | |

| 92 | 88 | |tests/rest/admin/test_user.py |

| 93 | 89 | |tests/rest/admin/test_username_available.py |

| 94 | 90 | |tests/rest/client/test_account.py |

| 111 | 107 | |tests/server_notices/test_resource_limits_server_notices.py |

| 112 | 108 | |tests/state/test_v2.py |

| 113 | 109 | |tests/storage/test_account_data.py |

| 114 | |tests/storage/test_appservice.py | |

| 115 | 110 | |tests/storage/test_background_update.py |

| 116 | 111 | |tests/storage/test_base.py |

| 117 | 112 | |tests/storage/test_client_ips.py |

| 124 | 119 | |tests/test_server.py |

| 125 | 120 | |tests/test_state.py |

| 126 | 121 | |tests/test_terms_auth.py |

| 127 | |tests/test_visibility.py | |

| 128 | 122 | |tests/unittest.py |

| 129 | 123 | |tests/util/caches/test_cached_call.py |

| 130 | 124 | |tests/util/caches/test_deferred_cache.py |

| 159 | 153 | [mypy-synapse.events.*] |

| 160 | 154 | disallow_untyped_defs = True |

| 161 | 155 | |

| 156 | [mypy-synapse.federation.*] | |

| 157 | disallow_untyped_defs = True | |

| 158 | ||

| 159 | [mypy-synapse.federation.transport.client] | |

| 160 | disallow_untyped_defs = False | |

| 161 | ||

| 162 | 162 | [mypy-synapse.handlers.*] |

| 163 | 163 | disallow_untyped_defs = True |

| 164 | 164 | |

| 165 | 165 | [mypy-synapse.metrics.*] |

| 166 | 166 | disallow_untyped_defs = True |

| 167 | 167 | |

| 168 | [mypy-synapse.module_api.*] | |

| 169 | disallow_untyped_defs = True | |

| 170 | ||

| 168 | 171 | [mypy-synapse.push.*] |

| 169 | 172 | disallow_untyped_defs = True |

| 170 | 173 | |

| 181 | 184 | disallow_untyped_defs = True |

| 182 | 185 | |

| 183 | 186 | [mypy-synapse.storage.databases.main.directory] |

| 187 | disallow_untyped_defs = True | |

| 188 | ||

| 189 | [mypy-synapse.storage.databases.main.events_worker] | |

| 184 | 190 | disallow_untyped_defs = True |

| 185 | 191 | |

| 186 | 192 | [mypy-synapse.storage.databases.main.room_batch] |

| 218 | 224 | |

| 219 | 225 | [mypy-tests.rest.client.test_directory] |

| 220 | 226 | disallow_untyped_defs = True |

| 227 | ||

| 228 | [mypy-tests.federation.transport.test_client] | |

| 229 | disallow_untyped_defs = True | |

| 230 | ||

| 221 | 231 | |

| 222 | 232 | ;; Dependencies without annotations |

| 223 | 233 | ;; Before ignoring a module, check to see if type stubs are available. |

| 64 | 64 | fi |

| 65 | 65 | |

| 66 | 66 | # Run the tests! |

| 67 | go test -v -tags synapse_blacklist,msc2946,msc3083,msc2403 -count=1 $EXTRA_COMPLEMENT_ARGS ./tests/... | |

| 67 | go test -v -tags synapse_blacklist,msc2403 -count=1 $EXTRA_COMPLEMENT_ARGS ./tests/... |

| 14 | 14 | # See the License for the specific language governing permissions and |

| 15 | 15 | # limitations under the License. |

| 16 | 16 | |

| 17 | ||

| 18 | """ | |

| 19 | Script for signing and sending federation requests. | |

| 20 | ||

| 21 | Some tips on doing the join dance with this: | |

| 22 | ||

| 23 | room_id=... | |

| 24 | user_id=... | |

| 25 | ||

| 26 | # make_join | |

| 27 | federation_client.py "/_matrix/federation/v1/make_join/$room_id/$user_id?ver=5" > make_join.json | |

| 28 | ||

| 29 | # sign | |

| 30 | jq -M .event make_join.json | sign_json --sign-event-room-version=$(jq -r .room_version make_join.json) -o signed-join.json | |

| 31 | ||

| 32 | # send_join | |

| 33 | federation_client.py -X PUT "/_matrix/federation/v2/send_join/$room_id/x" --body "$(<signed-join.json)" > send_join.json | |

| 34 | """ | |

| 35 | ||

| 17 | 36 | import argparse |

| 18 | 37 | import base64 |

| 19 | 38 | import json |

| 21 | 21 | from signedjson.key import read_signing_keys |

| 22 | 22 | from signedjson.sign import sign_json |

| 23 | 23 | |

| 24 | from synapse.api.room_versions import KNOWN_ROOM_VERSIONS | |